The Power of A/B Testing in Product Development

In a world of information overload and data noise, it is becoming harder to make decisions. A/B testing has proven to be a great method for making more confident, data-driven decisions.

Grace Hopper has said:

One accurate measurement is worth a thousand expert opinions.

It is hard to disagree. According to the McKinsey Global Institute, 25-45% of ideas fail. What is more, you have to assume that even the best ideas in your backlog require multiple iterations to achieve the goal that you have set.

To eliminate the guessing game, it has become standard to use A/B testing to find out which solution works best for a given user problem.

WHAT

Harvard Business Review defines A/B testing in the following way:

A/B testing, at its most basic, is a way to compare two versions of something to figure out which performs better.

In other words, A/B testing is an experiment where two or more variants of a page/screen are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal.

These days we mainly associate A/B tests with online experiments. Although the online tests we use today date only from the 1990s, it all started about 100 years ago.

The scientist Ronald Fisher was the first to experiment with different samples and to introduce the null hypothesis and statistical significance, concepts that scientists worldwide still use today. And it all started, interestingly enough, with a friendly bet with one of his colleagues during one of the four o’clock tea breaks at the Rothamsted research station.

Fisher poured a cup of tea with milk for Muriel Bristol. She refused to drink it because he had poured the milk first, and she insisted that she could taste the difference between tea-first and milk-first.

With the help of William Roach, they set up an experiment in which Bristol was to prove that she could taste the difference by trying samples of tea and telling whether each was tea-first or milk-first.

To everyone’s surprise, and especially Fisher’s, she guessed all of them right.

It was a devastating public defeat for Fisher, but without it he might never have gone on to develop the statistical methods that we still use today.

WHY

Today we are far from the manual calculations that Fisher used back then. There are multiple smart tools for A/B testing, and most of our job has shifted towards defining the user problem, forming a hypothesis, creating prototypes, and outlining success metrics.

We already know that roughly a third of ideas fail (the 25-45% cited above), so an A/B test helps take the guesswork away and make well-informed, data-driven decisions. Thanks to the test, the impact can be estimated, and a variation with a positive influence can be chosen for launch to all users.

Moreover, you and your team will feel more confident about the decisions that you make, and you will have a much stronger argument for or against a launch when you need to present the idea to management.

WHEN

From choosing the colour of a button to defining a better user flow: this is how wide the range can get when it comes to deciding to run an A/B test. What matters most is to set up a meaningful test that lets you improve not only a specific business metric, but also the user experience.

An A/B test is also called a split test. So the answer to “when” is: when you have 2 or more versions of a page/screen that you want to test against each other on a defined set of metrics.

A test is generally considered most effective when you make a single change, because then you can be sure of what actually influences the test metrics. But as mentioned before, an A/B test can also be built on user flows. Pay attention to the changes that you apply: if you make too many, you will later find it hard to identify what led to the success or to the insignificance of your test results.

HOW

A/B testing is a very goal-oriented process. The reason to set up an A/B test is to identify whether a change that you are planning to implement has a positive influence on your business metrics. A/B tests can range from something as small as changing the colour of a button to testing an entirely different flow.

1. Define the hypothesis: the first step is to formulate, based on the user problem, a hypothesis that consists of the user problem, the solution, and the assumed impact on the business KPIs.

For example, imagine a book store app with the following hypothesis: “We know that 30% of our users struggle to notice the ‘apply promo code’ button and therefore bounce from the purchase flow. By adding a black background to the button, we can ensure a higher CTR on the ‘apply promo code’ button and increase the number of orders by 6.4%.”

This not only helps you prepare for the test, but also brings clarity about your final goal. Of course, to create the hypothesis you need to look into the data so that you can back up your user problem and business goal with numbers. Spend most of your time (90%) on defining the user problem and only the rest on the solution and target results.

2. Build a prototype, define the test metrics.

Prepare the prototype and discuss it with the team. Very often, many things in the prototype change over the rounds of discussion. Try to choose the version that requires the least effort: this way you won’t lose precious time, and you won’t get so attached to your idea that you react negatively if your hypothesis is not validated.

It is always helpful to divide metrics into primary and secondary. The primary metric is the one that you want to have the highest impact on. In the example above, it is clicks on the “apply promo code” button.

Secondary metrics are the ones that your test can influence indirectly, so it is very important to track them as well. By adding a background to the button you may increase the clicks on it, but you might also reduce the number of items in the basket, because users start to pay more attention to the total sum and start deleting items. To be sure that you don’t have a negative influence on other important KPIs, include them in the list of secondary metrics.

For the given example, these can be books added to favourites, books added to the basket, and items deleted from the basket.
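The hypothesis and the metric split for the book store example could be written down in a small structure before anything is built. This is only a sketch: the class `ExperimentPlan` and the metric names are hypothetical and do not come from any testing tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ExperimentPlan:
    """Hypothetical record of what is agreed before the test is built."""

    hypothesis: str
    primary_metric: str
    secondary_metrics: Tuple[str, ...]

    def __post_init__(self) -> None:
        # A metric should be either primary or secondary, not both.
        if self.primary_metric in self.secondary_metrics:
            raise ValueError("primary metric repeated among secondary metrics")


# Metric names below are made up for the book store example.
plan = ExperimentPlan(
    hypothesis=(
        "A black background on the 'apply promo code' button raises its CTR "
        "and increases the number of orders by 6.4%"
    ),
    primary_metric="promo_code_button_clicks",
    secondary_metrics=(
        "books_added_to_favourites",
        "books_added_to_basket",
        "items_deleted_from_basket",
    ),
)
print(plan.primary_metric)
```

Writing the plan down this way makes it harder to quietly swap the primary metric after the results come in.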

Apart from the metrics, you should choose the traffic distribution and the target audience. The traffic distribution defines the portion of your user base that will see the experiment. The target audience depends on business specifics such as country, user segment, etc. In the given example, the target group can be users in the German market who have at least 1 item in their basket. Working out the distribution takes a bit more effort.

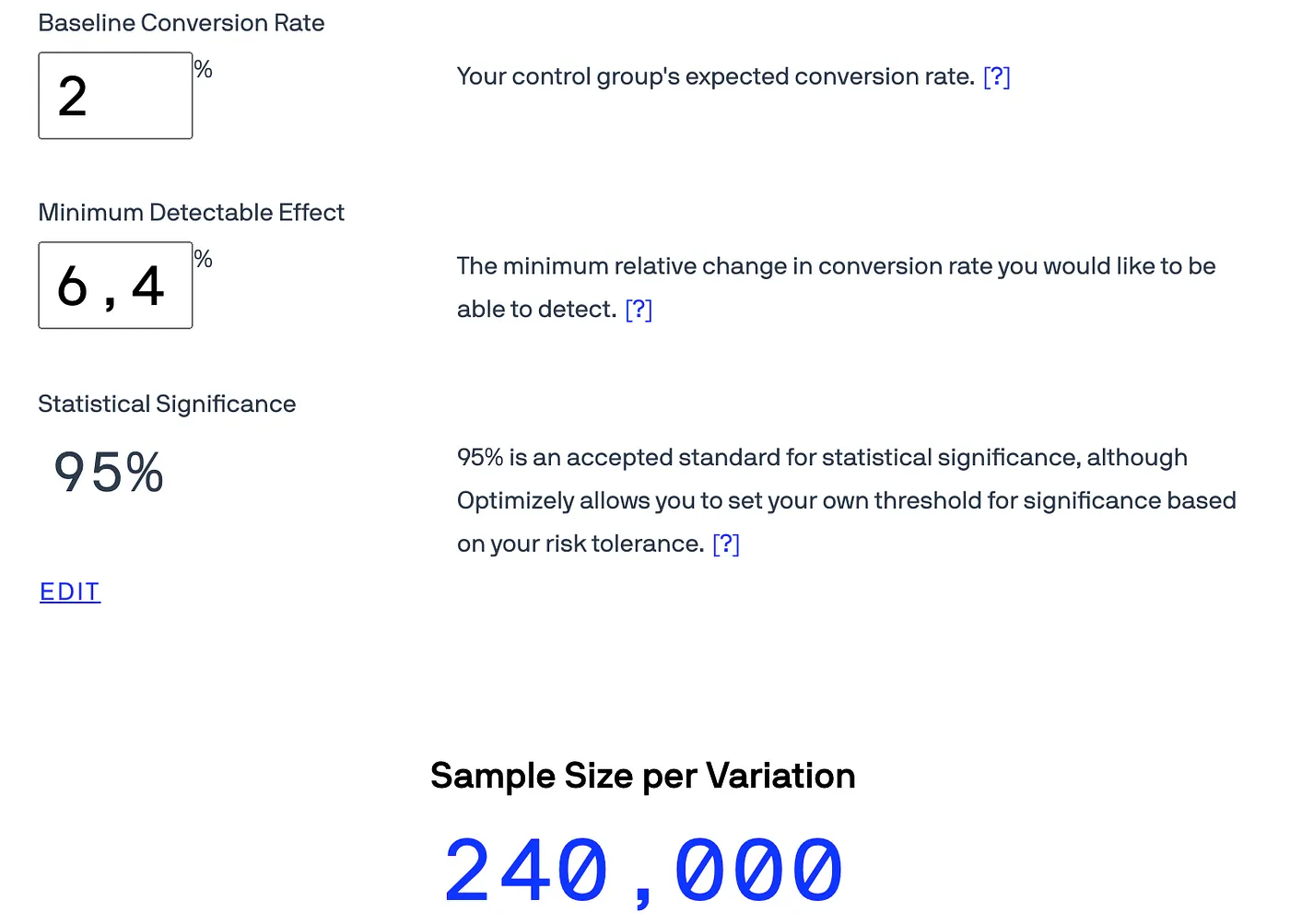

When you create your hypothesis, you estimate the change that you want to see in a specific business metric. Optimizely has a great calculator that helps you identify how many users need to see your test in order to detect the percentage change you are striving for at a given level of statistical significance. In our case, let’s say the current conversion rate is 2% and you expect the number of orders to increase by 6.4%. Entering these numbers into the calculator shows that we need an audience of 240,000 users per variation. Say we know that we get 3 million people with 1 item in the basket every month. That means that, to collect the sample within a month, we should distribute the test to 16% (240,000 × 2 / 3,000,000) of our users with 1 item in the basket in order to reach 95% statistical significance.
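To make the arithmetic concrete, here is a rough sketch in Python. The sample size function uses the classical fixed-horizon formula for comparing two proportions and assumes 80% power, which the example above does not state. The calculator may use a different method, so the result will not necessarily match the 240,000 quoted above. The function names are illustrative only.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Classical sample size per variation for a two-sided two-proportion test.

    The 80% power default is an assumption, not a value from the article.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 95% significance by default
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def traffic_share(users_per_variation, variations, eligible_users):
    """Fraction of the eligible audience that has to enter the test."""
    return users_per_variation * variations / eligible_users


# Classical estimate for a 2% baseline and a 6.4% relative lift.
classical_n = sample_size_per_variation(0.02, 0.064)
print(classical_n)

# Traffic share using the article's figures: 240,000 users per variation,
# 2 variations, 3 million eligible users per month.
share = traffic_share(240_000, 2, 3_000_000)
print(f"{share:.0%}")  # 16%
```

Whichever method produces the sample size, the traffic share follows from the same division: users needed across all variations over the eligible users in the period you are willing to wait.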

3. Run the experiment

Launch your test and wait for the users to participate.

Based on the traffic distribution and proportions that you defined, visitors will be randomly assigned to either the control or the variation group. Their interactions with each experience are measured, counted, and compared to determine how each performs.
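Testing tools handle the assignment for you, but the idea can be sketched in a few lines. The example below uses deterministic hashing of the user ID, one common way to get a stable, random-looking split. It is an illustration, not a description of how any particular platform works, and the function name and experiment key are hypothetical.

```python
import hashlib


def assign_variation(user_id, experiment_key, traffic_share,
                     variations=("control", "variation")):
    """Return the user's variation, or None if the user is outside the test."""
    raw = f"{experiment_key}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(raw).hexdigest()
    # Map the first 32 bits of the hash to a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket >= traffic_share:
        return None  # user keeps the normal experience and is not measured
    # Split the exposed traffic evenly between the variations.
    index = int(bucket / traffic_share * len(variations))
    return variations[min(index, len(variations) - 1)]


# The same user always lands in the same group for a given experiment.
first = assign_variation("user-123", "promo-button-background", 0.16)
second = assign_variation("user-123", "promo-button-background", 0.16)
print(first == second)  # True
```

Hashing instead of drawing a fresh random number on every visit means a returning user keeps seeing the same version, which keeps the measurements clean.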

4. Analyse the results

It is great if your test is statistically significant and you have validated your hypothesis. But if the test didn’t reach significance in its metrics, it is still an outcome full of learning. It means that the changes that you applied are not delivering a measurable positive influence on the test metrics.

The important thing here is not to keep the test running for multiple months while waiting for significance. If the test has been live for more than 3 weeks and statistical significance has not been reached, most likely it never will be.

Also think about it this way: if in a month’s time you are not able to have an impact on the desired business KPIs, most likely the launch of the change is not going to be noticeable either.

Learn from not seeing an impact instead of wasting your time waiting for statistical significance. Better to invest those precious hours in investigating other hypotheses and other ways of solving your user problem.

When your metrics are statistically significant, go ahead and apply the changes that you’ve tested. But don’t stop there: even ideas that have a positive influence on the business KPIs might still need iterations and improvements. Never stop making your ideas better and more user-centred.
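Your testing tool reports significance for you, but it helps to see what such a check can look like. Below is a minimal sketch of a classical two-proportion z-test. It is not necessarily the method your platform uses, and the order counts are made up purely for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 4,800 orders from 240,000 users in control (2%),
# 5,107 orders from 240,000 users in the variation (about 2.13%).
z, p_value = two_proportion_z_test(4_800, 240_000, 5_107, 240_000)
print(p_value < 0.05)  # True: significant at the 95% level
```

A p-value below 0.05 corresponds to the 95% significance level used in the example above. A p-value above it is the "no significance" outcome discussed in this step.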

HOW TO READ THE RESULTS

According to Kaiser Fung, 80 to 90 percent of A/B tests result in no statistical significance.

To management this might seem like a waste of time, but looked at from another angle it is a success: you have disproved your hypothesis, and that will save you precious time.

It is important to remember that inconclusive results can also be a consequence of the way the test was set up. With time and a better understanding of the product, you will learn how to build tests that yield significant results.

For example, my team uses Optimizely as a tool, but most of the existing A/B testing platforms follow the same logic. Optimizely treats your primary metric as a priority and puts all its effort into reaching statistical significance for that metric. The next 5 to 6 metrics are boxed into one group, and Optimizely tries to achieve significance for them at a similar level. Keep this in mind when you plan the test set-up.

The purpose of A/B testing lies in continuous iteration. There is no perfect solution; it can always be better. So whether or not you have a winning solution, it is recommended to keep looking for a better way of solving your customer problem. In other words, a test where version B didn’t gain enough statistical significance to be rolled out to all users was still a valuable test. You learnt that your assumption was wrong. Time to move on to the next solution.

When you move from optimising a key metric to optimising the customer experience, you get to the point where your tests are serving a bigger goal and contributing to a holistic idea of an excellent user journey.

TAKE-AWAYS

-

A/B testing takes its beginning in the beginning of 20th century with works of Ronald Fischer.

-

A/B testing helps you take data-driven decision and feel more confident about product and feature development choices.

-

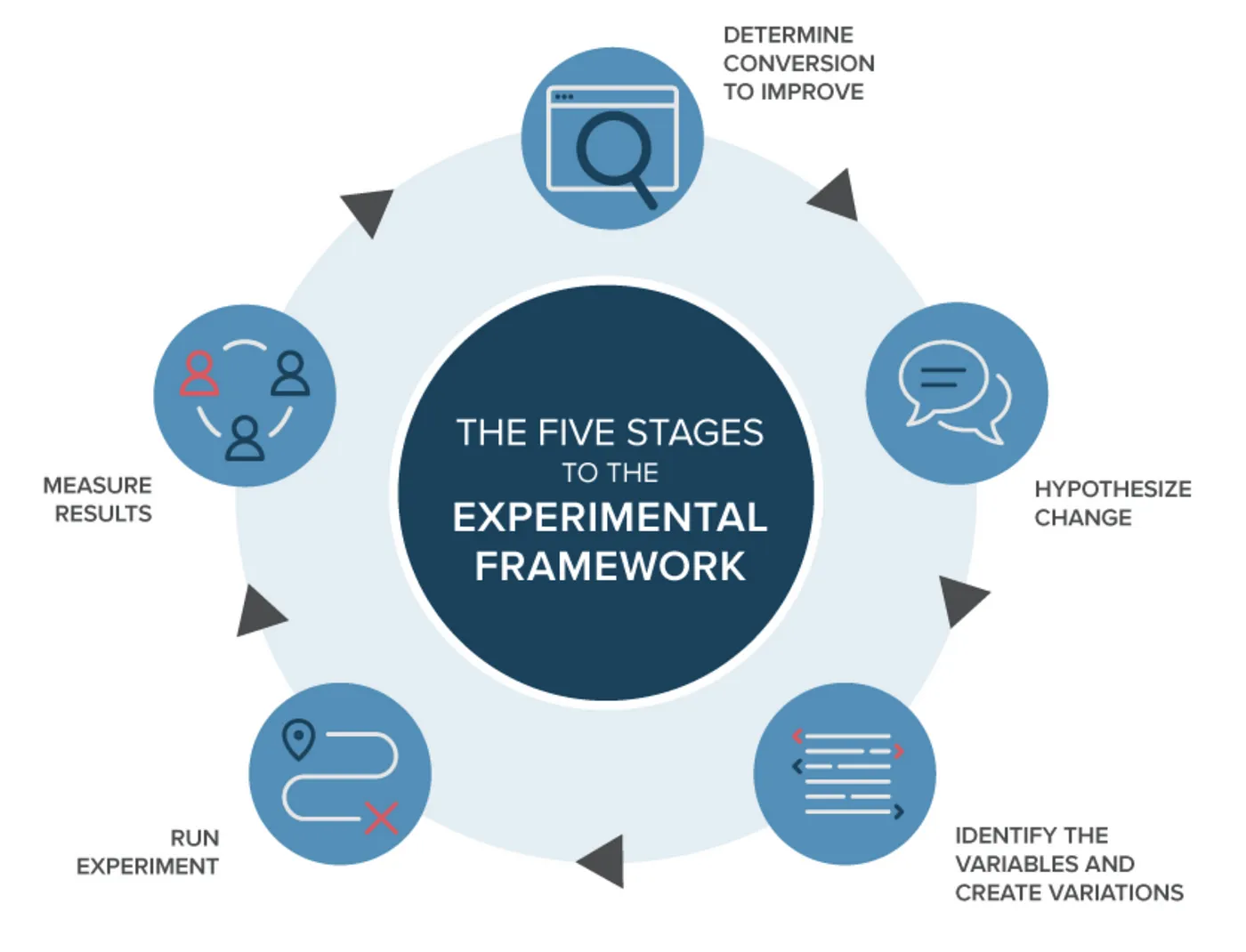

A/B testing process consists of forming a hypothesis, defining success metrics, building a prototype, running the test, analysing the results and answering the question “what is next”.

-

If your test yields insignificant results for more than 3–4 weeks, take it as a result. Insignificance of your test metrics only means that you solution won’t make a change to an existing problem. GREAT! Move on to another solution, another hypothesis, a different test set-up. Don’t waste your time on waiting.

-

The more you practice A/B testing, the more you understand that it is not about improving a metric, but about improving the user experience.

Sources:

A/B Testing: Optimizely glossary

https://www.optimizely.com/optimization-glossary/ab-testing/#:~:text=AB testing is essentially an,for a given conversion goal.

A/B Test Planning: How to Build a Process that Works

https://cxl.com/blog/how-to-build-a-strong-ab-testing-plan-that-gets-results/

How to Do A/B Testing: A Checklist You’ll Want to Bookmark

https://blog.hubspot.com/marketing/how-to-do-a-b-testing

A Refresher on A/B Testing by Amy Gallo

https://hbr.org/2017/06/a-refresher-on-ab-testing

Conversion Best Practices Toolkit: Grow your business by improving conversion rates at every step of the customer journey

https://assets.ctfassets.net/zw48pl1isxmc/5v0NU8QCC0X2B6kxt8RPAO/343358a0805df9b9b327cc12b4dca9cb/ebook_Conversion-best-practices-toolkit-2021.pdf

Yes, A/B Testing Is Still Necessary by Kaiser Fung

https://hbr.org/2014/12/yes-ab-testing-is-still-necessary

Senior Product Manager at AutoScout24